Richard Socher and Bryan McCann, recognized as highly cited AI researchers globally, have published 35 predictions for 2026 (URL: https://bit.ly/You_013126Predictions). Among these, three notable predictions include:

The large language model (LLM) revolution will experience a period of resource reallocation, with investment shifting back towards foundational research.

“Reward engineering” is anticipated to emerge as a distinct profession, as current prompting methods may become insufficient for future AI advancements.

Traditional coding practices are projected to be largely superseded by December, with AI systems assuming coding responsibilities and human oversight managing the process.

The complete set of 35 predictions can be accessed for further review (URL: https://bit.ly/You_013126CTA).

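This week’s system design refresher covers the following topics:

HTTP/2 over TCP versus HTTP/3 over QUIC

How Cursor’s coding agent works

How Git works internally

How NAT works

Building an application on the Ring APIs

Employment opportunities are currently available at ByteByteGo.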

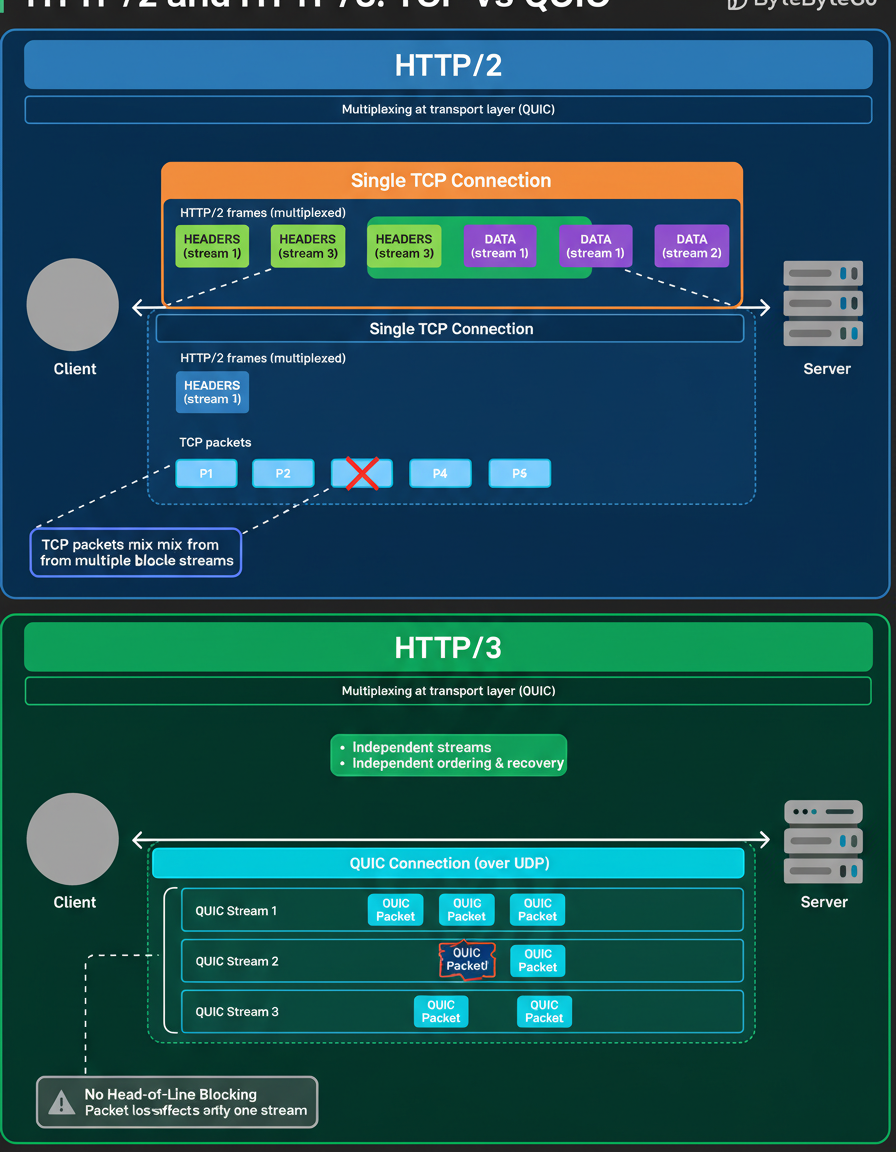

The evolution from HTTP/2 to HTTP/3 represents more than a mere protocol upgrade; it signifies a fundamental re-evaluation of the transport layer.

HTTP/2 addressed a significant limitation of HTTP/1.1: the need for many parallel connections. It introduced multiplexing, which lets multiple requests and responses share a single connection. In theory, that looks like an optimal solution.

However, HTTP/2 operates on TCP at its core. Consequently, all streams utilize the same TCP connection, adhering to shared ordering and congestion control mechanisms. When even a single TCP packet is lost, TCP inherently pauses data delivery until the lost packet is successfully retransmitted.

Given that individual packets can carry data from various streams, a single packet loss can consequently impede all active streams. This phenomenon is known as TCP head-of-line blocking. Data is multiplexed at the HTTP layer but serialized at the transport layer.

HTTP/3 adopts a distinct methodology. Instead of relying on TCP, it operates over QUIC, which is constructed upon UDP. QUIC effectively integrates multiplexing directly into the transport layer itself.

Under QUIC, each stream functions independently, with its own ordering and recovery mechanisms. Should a packet be lost, only the streams whose data it carried are delayed, while other streams continue their transmission unimpeded. The concept is the same as at the HTTP layer, but the behavior on the wire is considerably different.

Key distinctions are observed in their multiplexing strategies:

HTTP/2: Multiplexing implemented above TCP

HTTP/3: Multiplexing integrated within the transport layer
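The difference can be illustrated with a toy model. The sketch below is a simplification rather than an implementation of either protocol: it assumes each packet carries data for exactly one stream, and the packet layout and the function names `deliverable_tcp` and `deliverable_quic` are hypothetical. It shows what a receiver can hand to the application while one lost packet is still awaiting retransmission.

```python
# Each packet: (global sequence number, stream id, offset within that stream).
# Assumption for illustration: one stream's data per packet.
PACKETS = [
    (0, "A", 0),
    (1, "B", 0),
    (2, "C", 0),
    (3, "A", 1),
    (4, "B", 1),
    (5, "C", 1),
]


def deliverable_tcp(arrived, packets):
    """One ordered stream: delivery stops at the first gap in the global order."""
    delivered = []
    for seq, stream, offset in packets:
        if seq not in arrived:
            break  # everything behind the gap waits, whatever stream it belongs to
        delivered.append((stream, offset))
    return delivered


def deliverable_quic(arrived, packets):
    """Per-stream ordering: a gap blocks only the stream that lost data."""
    blocked = set()
    delivered = []
    for seq, stream, offset in packets:
        if stream in blocked:
            continue  # later data on a blocked stream must wait for the retransmit
        if seq not in arrived:
            blocked.add(stream)
            continue
        delivered.append((stream, offset))
    return delivered


# Packet 1 (stream B) is lost; every other packet has arrived.
arrived = {0, 2, 3, 4, 5}
print("TCP-style delivery: ", deliverable_tcp(arrived, PACKETS))
print("QUIC-style delivery:", deliverable_quic(arrived, PACKETS))
```

In this model the TCP-style receiver delivers only the first chunk of stream A, while the QUIC-style receiver delivers everything for streams A and C and holds back only stream B.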

Readers are invited to consider whether TCP head-of-line blocking has been observed in their practical system implementations or if it primarily remains a theoretical concern in their experience.

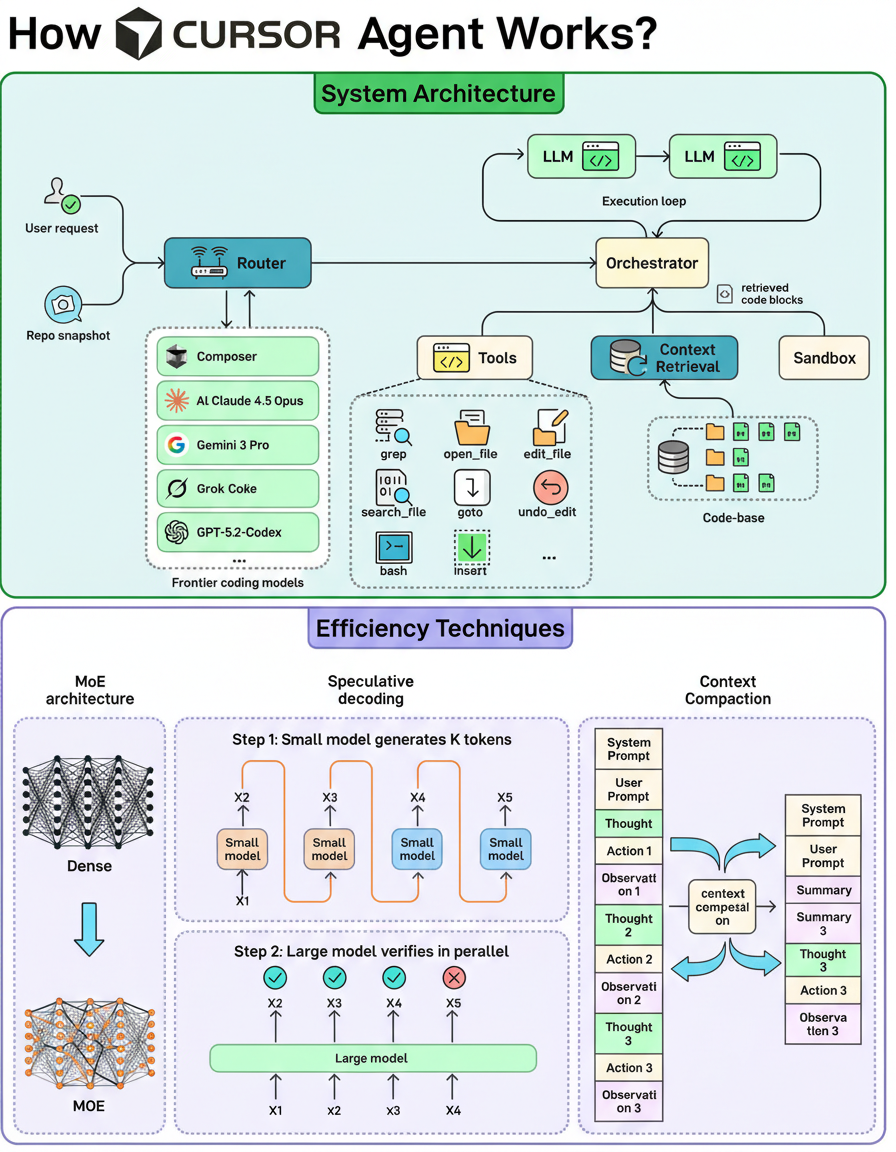

Cursor has recently launched Composer, its agentic coding model, and reports that the agent can be approximately 4 times faster.

Collaborations with the Cursor team, notably with Lee Robinson (URL: https://www.linkedin.com/in/leeerob/), provided insights into the system’s architecture and the underlying factors contributing to its speed.

A coding agent functions as a system capable of interpreting a given task, navigating a repository, modifying multiple files, and iteratively refining code until all build processes and tests are successfully completed.

Within the Cursor environment, an internal router initially selects an appropriate coding model (including Composer) to process incoming requests.

Subsequently, the system initiates a procedural loop: it retrieves the most pertinent code segments (context retrieval), employs various tools to open and modify files, and executes commands within a sandboxed environment. The task is deemed complete upon successful passage of all tests.
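The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Cursor’s implementation: `run_agent` and the four callbacks are hypothetical names, the "repository" is an in-memory dictionary, the "model" is a stub that corrects itself once it sees test feedback, and `exec` stands in for a real sandbox. The model-routing step is omitted.

```python
def run_agent(task, retrieve_context, propose_edits, apply_edits, run_tests,
              max_iterations=5):
    """Retrieve context, edit, and test until the tests pass or the budget runs out.

    Returns the number of iterations used, or None if the tests never passed.
    """
    feedback = ""
    for iteration in range(1, max_iterations + 1):
        context = retrieve_context(task, feedback)      # context retrieval
        edits = propose_edits(task, context, feedback)  # model proposes changes
        apply_edits(edits)                              # tools modify files
        passed, feedback = run_tests()                  # commands run in a sandbox
        if passed:
            return iteration
    return None


# Toy stand-ins so the loop can run (all hypothetical).
repo = {"add.py": "def add(a, b): return a - b"}


def retrieve_context(task, feedback):
    # A real system would rank and select relevant snippets; here, return every file.
    return dict(repo)


def propose_edits(task, context, feedback):
    # Stub model: wrong on the first try, correct once it has test feedback.
    body = "a + b" if feedback else "a * b"
    return {"add.py": f"def add(a, b): return {body}"}


def apply_edits(edits):
    repo.update(edits)


def run_tests():
    scope = {}
    exec(repo["add.py"], scope)  # stand-in for running tests in a sandbox
    ok = scope["add"](2, 3) == 5
    return ok, "" if ok else "add(2, 3) should be 5"


iterations_used = run_agent("fix add()", retrieve_context, propose_edits,
                            apply_edits, run_tests)
print("tests passed after", iterations_used, "iteration(s)")
```

Passing test feedback back into retrieval and editing is what makes the process iterative, and the `max_iterations` cap is an added safeguard so the sketch cannot loop forever.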

To keep this iterative process fast, Cursor employs three primary techniques, which are detailed in the full newsletter (URL: https://blog.bytebytego.com/p/how-cursor-shipped-its-coding-agent).

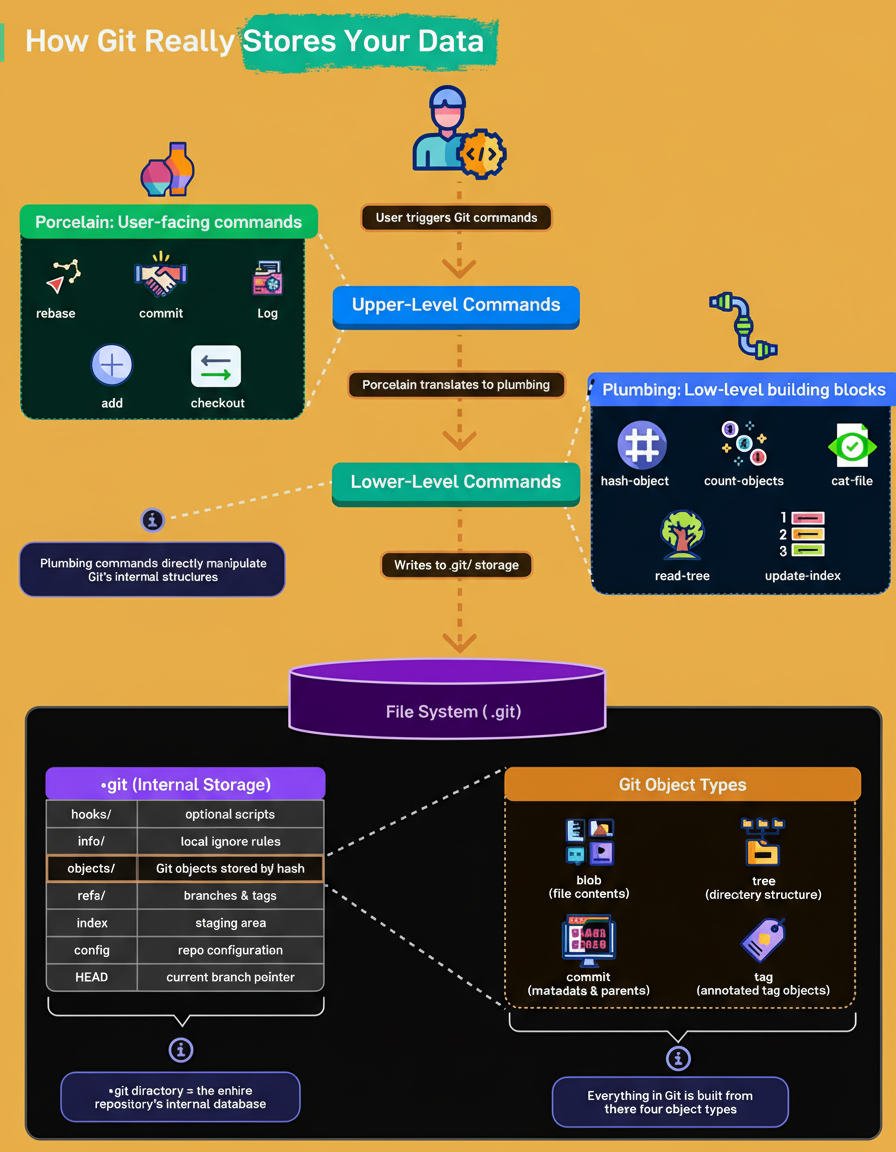

Many developers frequently utilize Git for version control, executing commands such as add, commit, or checkout, yet few possess an in-depth understanding of its internal operations. This section delves into the underlying mechanisms.

Git’s architecture is structured into two distinct layers:

Porcelain (user-facing commands): add, commit, checkout, and rebase, among others.

Plumbing (low-level commands): hash-object, cat-file, read-tree, and update-index.

When a Git command is initiated, it ultimately operates on the .git directory, which functions as Git’s complete internal database.

The .git directory houses all essential components required to reconstruct a repository. Key components include:

objects/: Stores all file content and associated metadata, indexed by hash.

refs/: Contains references for branches and tags.

index: Represents the staging area for changes.

config: Holds the repository’s specific configuration settings.

HEAD: A pointer indicating the currently active branch (or a specific commit when detached).

It is crucial to understand that the .git folder holds the entire history of a repository; deleting it discards the project’s historical data unless another clone or backup exists.
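The "indexed by hash" detail for objects/ can be made concrete. The sketch below assumes a repository using Git’s default SHA-1 object format. It reproduces the identifier that `git hash-object` computes for a file’s contents: the SHA-1 of a short header (`blob`, the content length, and a NUL byte) followed by the content itself. The function names `git_blob_id` and `loose_object_path` are hypothetical.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Return the object id Git assigns to file contents (SHA-1 object format)."""
    header = b"blob " + str(len(content)).encode("ascii") + b"\0"
    return hashlib.sha1(header + content).hexdigest()


def loose_object_path(object_id: str) -> str:
    """Loose objects live under objects/<first two hex chars>/<remaining chars>."""
    return f".git/objects/{object_id[:2]}/{object_id[2:]}"


blob_id = git_blob_id(b"hello world\n")
print(blob_id)
print(loose_object_path(blob_id))
```

Because the identifier depends only on the content, two files with identical contents share a single stored object. Objects that Git has packed are kept in pack files rather than at the loose path shown here.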

Fundamentally, all elements within Git are constructed from a combination of just four object types:

blob: Represents the actual file contents.

tree: Organizes directories and their contents.

commit: Contains metadata about a change, including references to parent commits.

tag: Provides an annotated reference to specific points in history.

Readers are encouraged to share which Git command has proven most perplexing in their practical development projects.

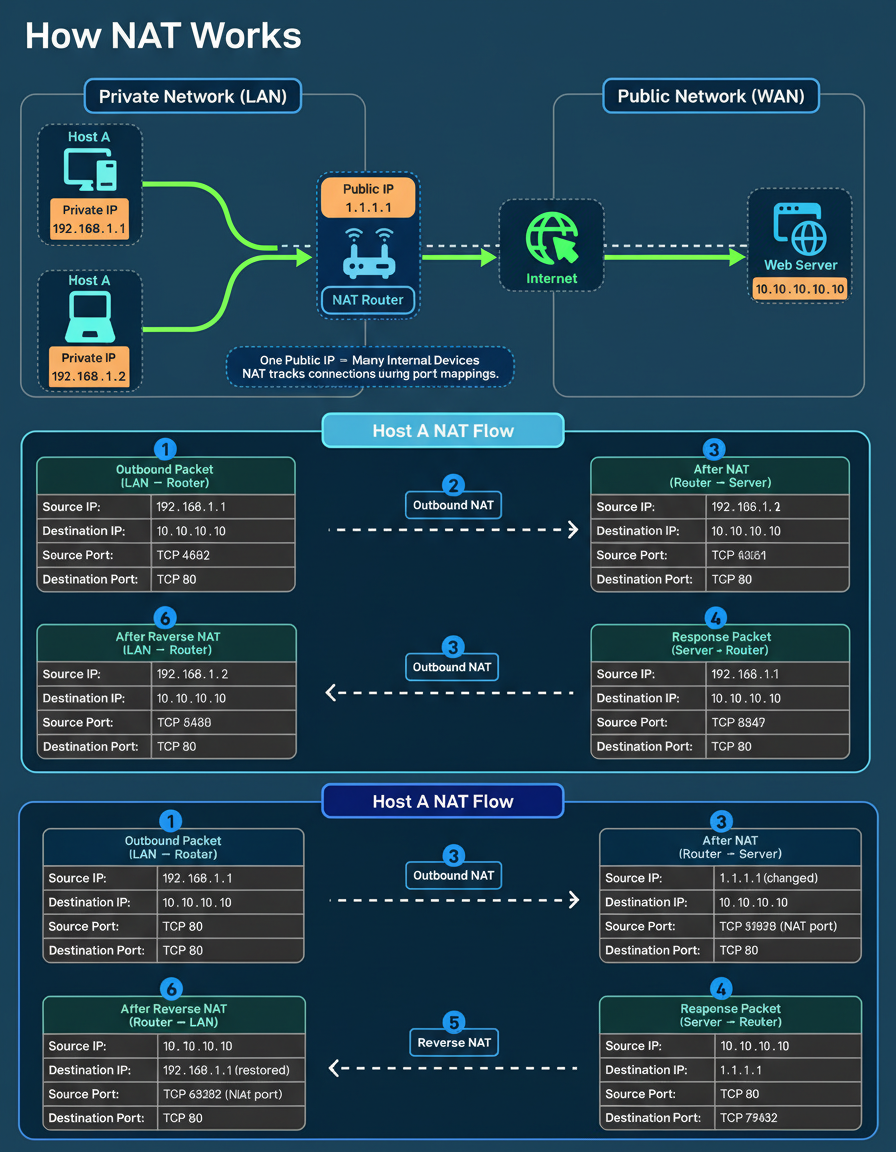

Within a typical household, numerous devices often share a single public IP address while maintaining independent browsing, streaming, and connection capabilities.

The mechanism that makes this possible is Network Address Translation (NAT), a crucial, often unseen, component of modern networking infrastructure. NAT has played a significant role in mitigating the exhaustion of IPv4 addresses and allows routers to conceal multiple internal devices behind a single public IP.

The Core Idea: Within a local network, devices operate using private IP addresses that are confined to the internal network. Conversely, the router utilizes a single public IP address for all communications with external networks.

NAT performs the function of rewriting each outgoing network request, making it appear to originate from the public IP address, and simultaneously allocates a unique port mapping for every internal connection.

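When an internal device dispatches a request, the router replaces the packet’s private source address and port with its public IP address and the allocated port, and records that mapping in its translation table.

Upon the return of a response packet, the router looks up the destination port in the translation table, rewrites the destination to the matching private address and port, and forwards the packet to the internal device.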
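The port-mapping idea can be sketched as a pair of lookup tables. This is a simplified illustration, not how any particular router implements NAT: the class `NatRouter`, its method names, and the starting port 40000 are hypothetical, the addresses come from documentation and private ranges, and details such as protocols, remote endpoints, and mapping timeouts are left out.

```python
from dataclasses import dataclass, field


@dataclass
class NatRouter:
    public_ip: str
    next_port: int = 40000  # arbitrary starting point for allocated public ports
    outbound: dict = field(default_factory=dict)  # (private_ip, private_port) -> public_port
    inbound: dict = field(default_factory=dict)   # public_port -> (private_ip, private_port)

    def translate_outbound(self, src_ip, src_port):
        """Rewrite a private source to (public_ip, port), allocating a port if needed."""
        key = (src_ip, src_port)
        if key not in self.outbound:
            port = self.next_port
            self.next_port += 1
            self.outbound[key] = port
            self.inbound[port] = key
        return self.public_ip, self.outbound[key]

    def translate_inbound(self, dst_port):
        """Map a response's destination port back to the internal device, if known."""
        return self.inbound.get(dst_port)


router = NatRouter(public_ip="203.0.113.5")
laptop = router.translate_outbound("192.168.1.10", 51000)
phone = router.translate_outbound("192.168.1.11", 51000)
print(laptop, phone)
print(router.translate_inbound(phone[1]))
```

Two devices can use the same private source port because the router assigns each connection its own public port. A response arriving on a port with no mapping returns `None` here, which corresponds to the router having no internal device to forward the packet to.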

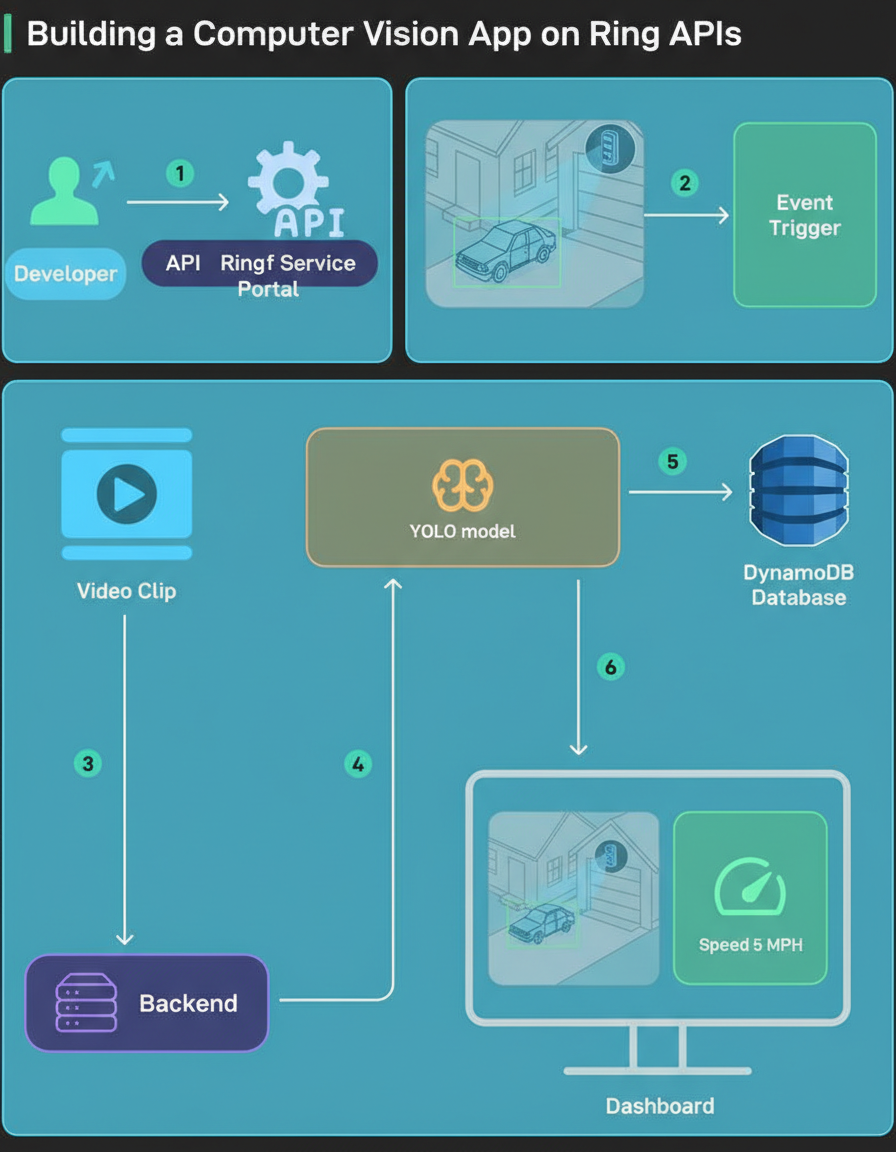

Ring has announced the launch of a new Appstore, and for the first time third-party developers can request early access to Ring APIs.

This initiative transforms Ring from a proprietary product into an extensible, programmable platform.

One of the initial teams gaining early access to the Ring API explored the potential for developers to innovate with Ring event data and assessed the speed of bringing such applications to production.

A “Driveway Derby Detector” application was developed. Its high-level operational flow is as follows:

Developers interested in replicating this project can request early access through the provided link (URL: https://bit.ly/bbg-ring-developer).