System Design Essentials: Databases, Messaging, HTTP & DNS Explained

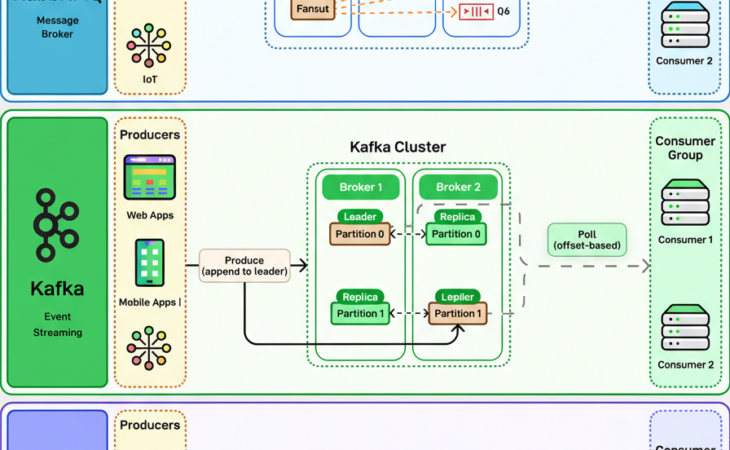

This week’s system design refresher covers several fundamental concepts:

- Transformers Step-by-Step Explained (YouTube video)
- The database types essential for 2025
- A comparison of Apache Kafka and RabbitMQ
- The HTTP Mindmap
- A detailed explanation of how DNS works
- A look at how clients can receive real-time updates from web servers

Transformers Step-by-Step Explained (Attention Is …
